Authors:

(1) Qian Yang, Zhejiang University, Equal contribution. This work was conducted during Qian Yang’s internship at Alibaba Group;

(2) Jin Xu, Alibaba Group, Equal contribution;

(3) Wenrui Liu, Zhejiang University;

(4) Yunfei Chu, Alibaba Group;

(5) Xiaohuan Zhou, Alibaba Group;

(6) Yichong Leng, Alibaba Group;

(7) Yuanjun Lv, Alibaba Group;

(8) Zhou Zhao, Alibaba Group and Corresponding to Zhou Zhao ([email protected]);

(9) Yichong Leng, Zhejiang University;

(10) Chang Zhou, Alibaba Group and Corresponding to Chang Zhou ([email protected]);

(11) Jingren Zhou, Alibaba Group.

3 AIR-Bench

Three characteristics differentiate AIR-Bench from existing benchmarks for audio understanding: i) AIR-Bench is the first work to incorporate task evaluation from all types of audio in a hierarchical taxonomy; ii) AIR-Bench is the first generative evaluation benchmark that handles the free-form output of LALMs; iii) AIR-Bench adopts GPT-4-based automatic evaluation, yielding trustworthy evaluation results at an affordable cost. In Sec. 3.1, we present the hierarchical taxonomy of AIR-Bench and discuss the design philosophy behind it. In Sec. 3.2 and Sec. 3.3, we introduce how we collect the audio-central question-answer pairs for the foundation and chat tasks. In Sec. 3.4, we present the evaluation framework of AIR-Bench.

3.1 Overview

Chat interaction based on audio is a complex task that encompasses a variety of fundamental competencies. For instance, humans are able to respond to sound events due to their capacities for sound perception and common sense reasoning. Similarly, the ability to respond to others’ spoken words is predicated on foundational skills such as speech-to-text recognition and emotion recognition. Based on this motivation, we propose the hierarchical benchmark AIR-Bench by dividing it into foundation and chat benchmarks. The foundation benchmark is designed to assess capabilities across individual subtasks, serving to diagnose weaknesses within the model, while the chat benchmark directly evaluates complicated audio-based open-ended questions. Each data sample is denoted as (A, Q, R), where A denotes the audio, Q represents the query, and R is the reference answer.

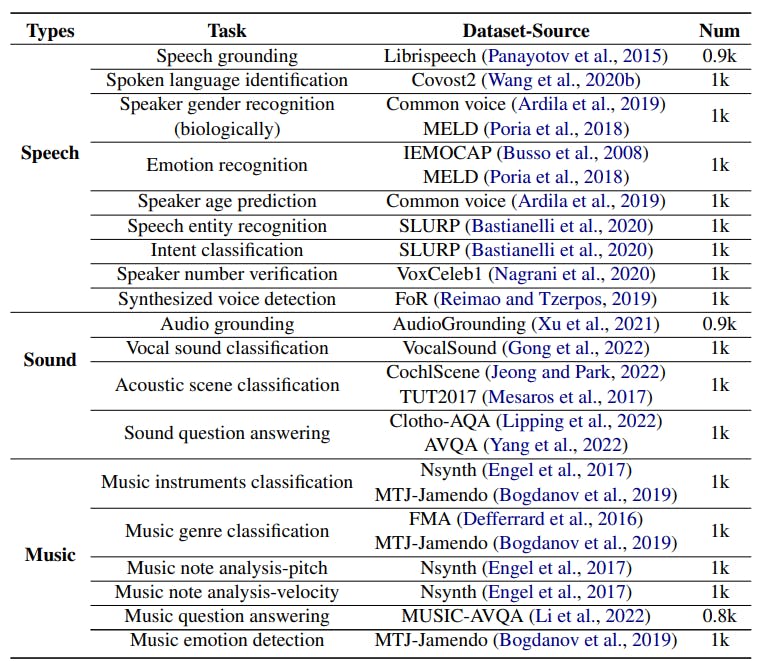

• Foundation benchmark: The purpose of this benchmark is to evaluate individual capabilities on foundational tasks. To reduce task difficulty and enable the evaluation of various models, we utilize the single-choice question-answering format. Specifically, the query Q is formed by concatenating a question q and candidate choices C, denoted as Q = (q, C). We curate a collection of 19 audio tasks that span multiple audio types, such as speech, music, and sound. These include emotion recognition, acoustic scene classification, and music QA. [1]

• Chat benchmark: This benchmark encompasses any form of question-answer pair that could arise from audio signals, with the aim of reflecting the model’s ability to genuinely follow user instructions to perceive, reason, and interact within real-world applications. According to the type of audio, the benchmark is categorized into four dimensions: speech, sound, music, and mixed audio, where mixed audio refers to audio that is a mixture of multiple types of audio, such as human voice with background music.

The overview of AIR-Bench is shown in Fig. 1.
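To make the sample notation concrete, the following is a minimal Python sketch of how a data sample (A, Q, R) and the single-choice query Q = (q, C) could be represented. The class name `Sample`, its field names, the use of a file path for the audio, and the prompt layout with lettered choices are all illustrative assumptions; the text above does not specify a storage format or an exact prompt template.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Sample:
    """One benchmark item (A, Q, R). All field names are hypothetical."""
    audio_path: str                      # A: the audio (a file path is an assumption)
    question: str                        # q: the question text
    reference: str                       # R: the reference answer
    choices: Optional[List[str]] = None  # C: candidate choices; None for chat samples

    def query(self) -> str:
        """Build Q: (q, C) concatenated for foundation tasks, q alone for chat."""
        if not self.choices:
            return self.question
        labels = "ABCDEFGHIJKLMNOPQRSTUVWXYZ"
        # The lettered layout below is an assumed prompt format, not the paper's.
        options = "\n".join(
            f"{labels[i]}. {choice}" for i, choice in enumerate(self.choices)
        )
        return f"{self.question}\nChoices:\n{options}"


# Foundation-style sample: the query carries both the question and the choices.
foundation = Sample(
    audio_path="clip.wav",
    question="What emotion does the speaker express?",
    reference="happy",
    choices=["happy", "sad"],
)
print(foundation.query())

# Chat-style sample: open-ended, so the query is the question alone.
chat = Sample(
    audio_path="clip.wav",
    question="Describe the audio.",
    reference="A dog barks.",
)
print(chat.query())
```

Keeping the choices optional lets one structure cover both benchmarks: foundation samples carry C and expect a single selected option, while chat samples leave it unset and are answered in free form.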

This paper is available on arXiv under the CC BY 4.0 DEED license.

[1] For transcription tasks such as ASR and S2TT, we incorporate them into the chat benchmark since they are not suitable for the single-choice task format.